Chunze LIN

Senior Research Scientist, SenseTime Research

Digital World Group, Digital Human Analysis

Haidian District, Beijing, China

Email: linchunze[at]sensetime.com

Biography

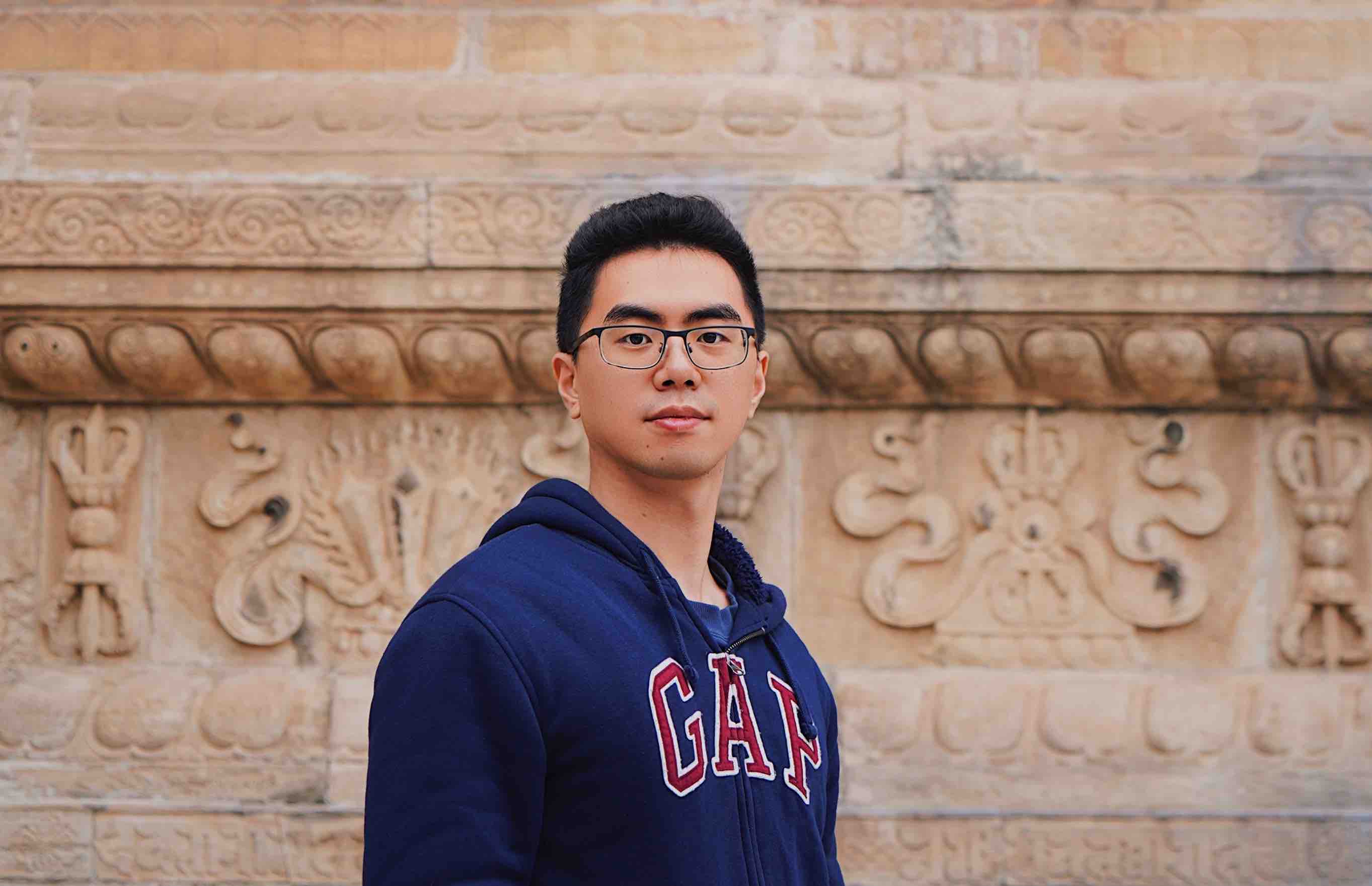

I am currently a Senior Research Scientist at SenseTime, Digital World Group. I am a tech lead working with my team on 2D/3D avatar driving and generative models. If you are interested in applying for an internship or a researcher/engineer position related to talking heads, generative models, or 3D face reconstruction and animation, please contact me.

Previously, I received my M.Eng. degree from Tsinghua University in 2019, advised by Professor Jiwen Lu and Professor Jie Zhou. I also received my diplôme d'ingénieur from Ecole Centrale de Nantes, France in 2019 as part of a dual-degree program.

Experiences

Senior Researcher

May 2022 - Present | SenseTime Research

Researcher

Aug 2019 - May 2022 | SenseTime Research

Research Associate

Sep 2017 - Aug 2019 | i-VisionGroup, Tsinghua University

Advised by Professor Jiwen Lu

Projects

Generative Models

Audio Driven Talking Head

Given text or audio, we generate a 2D avatar whose visuals are synchronized with the audio. All parts of the head look natural during speech, with no unnatural mouth, teeth, or nose movements.

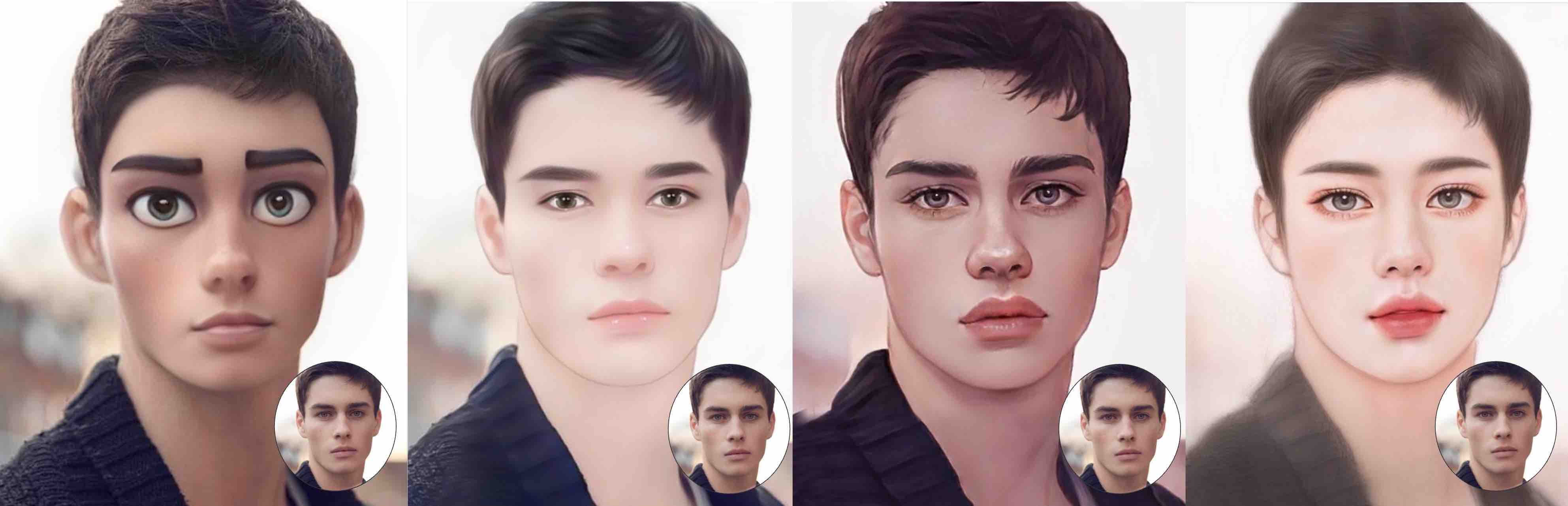

Face Stylization

This project converts a given image into a dozen different styles while preserving the characteristics of the input person: sketch, American comic, manga, oil painting, CG style, Disney style, etc.

Face Beautification

Given a human face, we eliminate most flaws, wrinkles, and dark circles on the face. We generate a natural, realistic skin texture and retain distinctive personal characteristics to avoid an obvious airbrushed look.

Facial Attributes Editing

I built a GAN Studio based on StyleGAN2 and disentangled latent vectors. With this GAN Studio, users can easily edit facial attributes by moving per-attribute sliders and can merge attributes from two facial images.

3D Reconstruction & Driving

3D Avatar Reconstruction

Given multi-view RGB face images of a person, we reconstruct their 3D structure while preserving their personal characteristics. We also incorporate eyes, eyelashes, and inner mouth parts, and generate blendshapes, yielding a high-quality, complete 3D avatar ready to be driven.

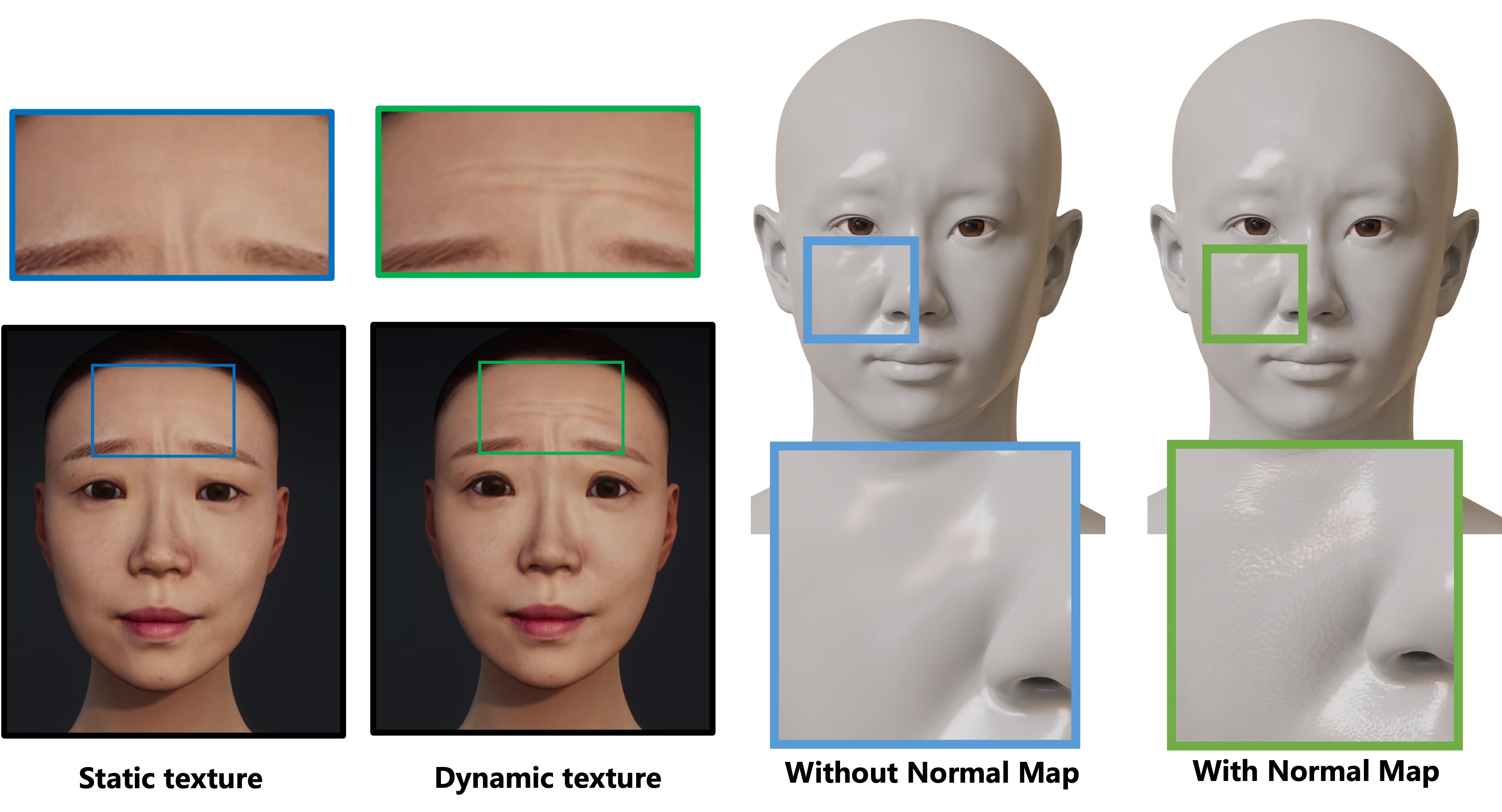

3D Dynamic Texture & Normal Map Generation

This project generates multiple high-quality texture and normal maps for different expressions of a 3D avatar. Dynamic texture and normal maps provide more vivid and realistic driving effects.

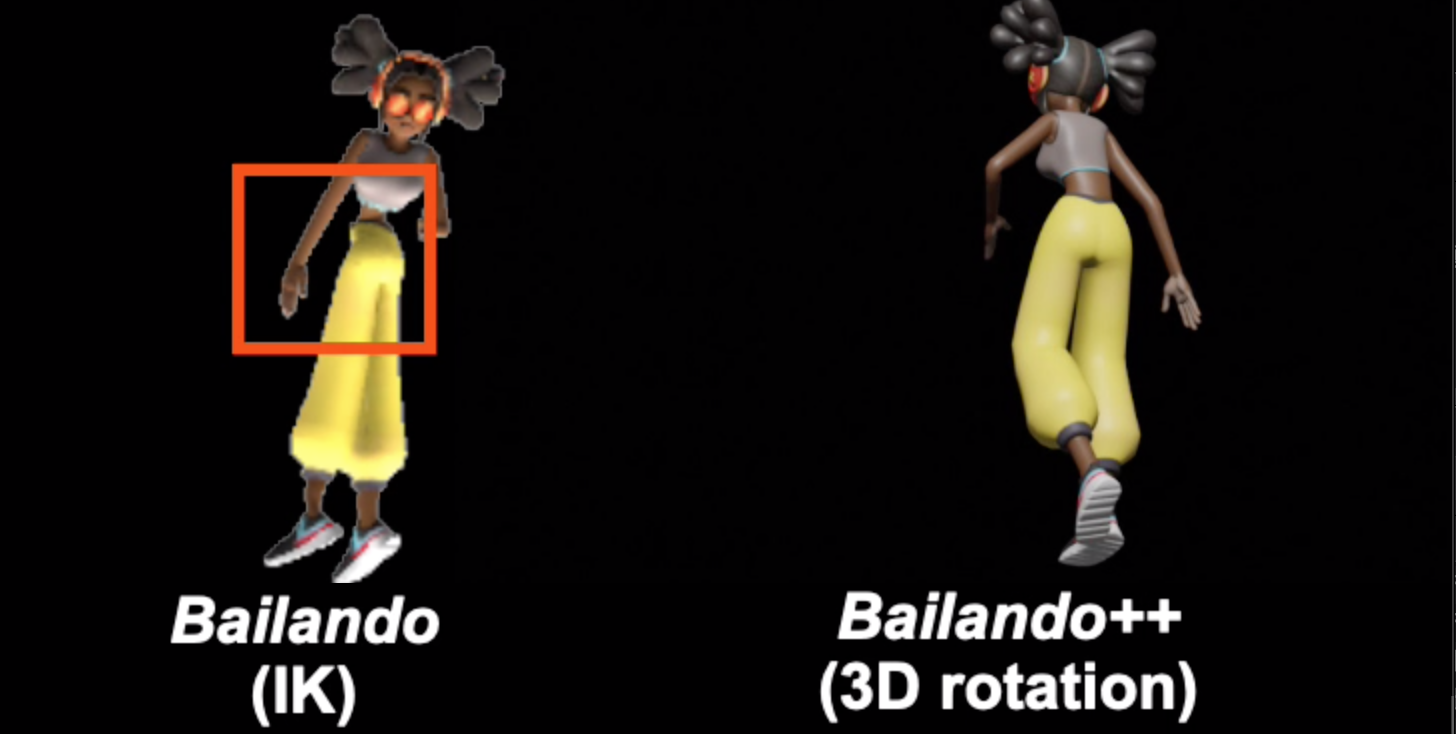

3D Avatar Driving

An image-based expression retargeting project in which the model accurately transfers large-scale and fine-scale facial expressions from human facial movements to 3D virtual characters.

Selected Publications [Full List]

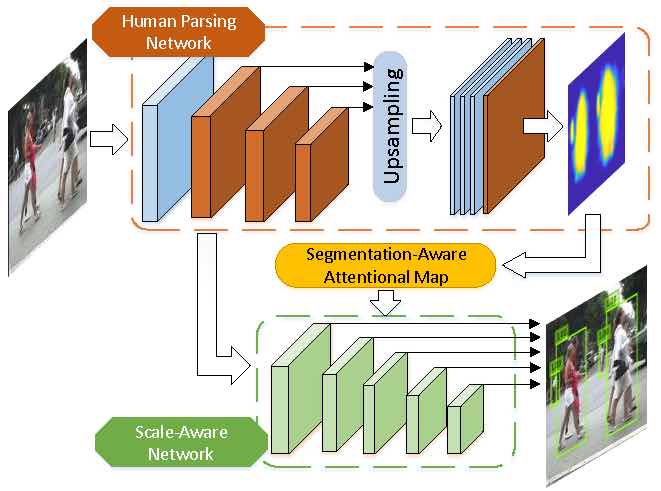

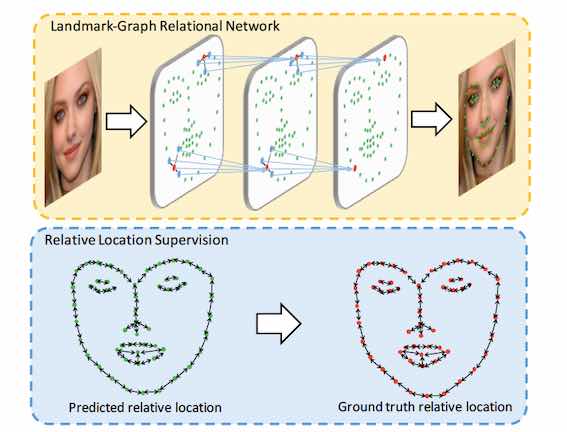

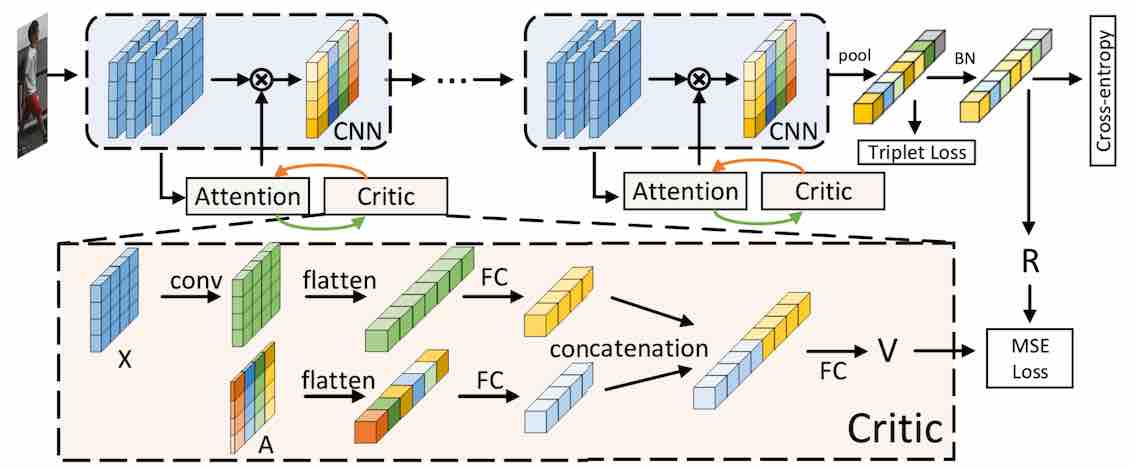

Chunze Lin, Jiwen Lu, Gang Wang and Jie Zhou

IEEE Transactions on Image Processing (TIP), 2020

[Paper]

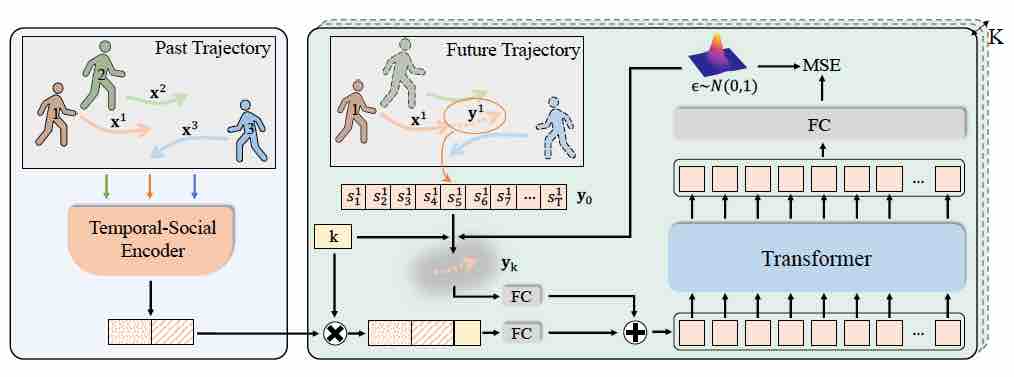

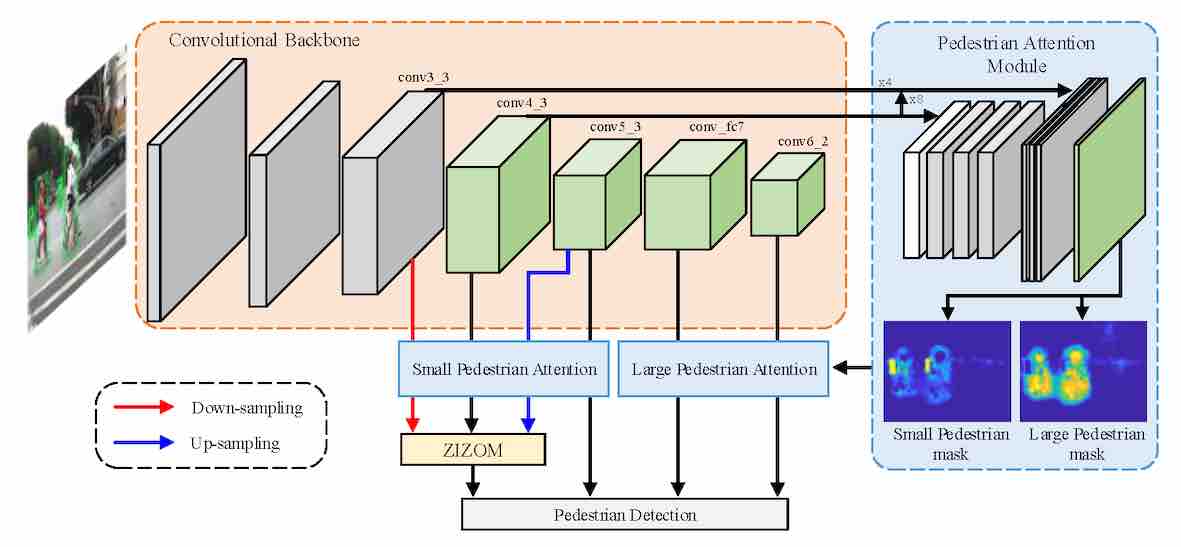

Guangyi Chen, Chunze Lin, Liangliang Ren, Jiwen Lu and Jie Zhou

IEEE International Conference on Computer Vision (ICCV), 2019

[Paper]

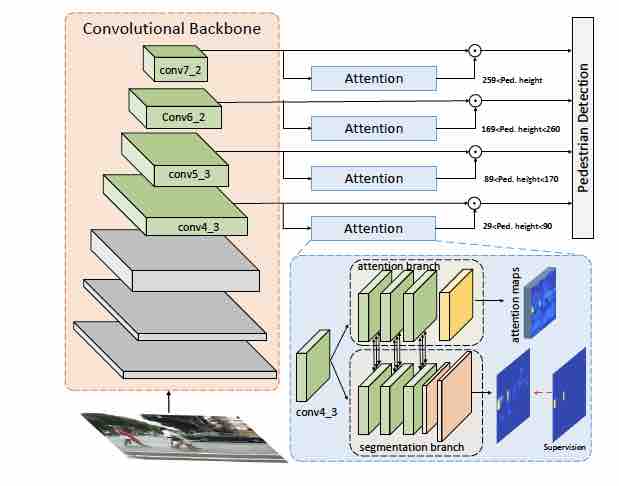

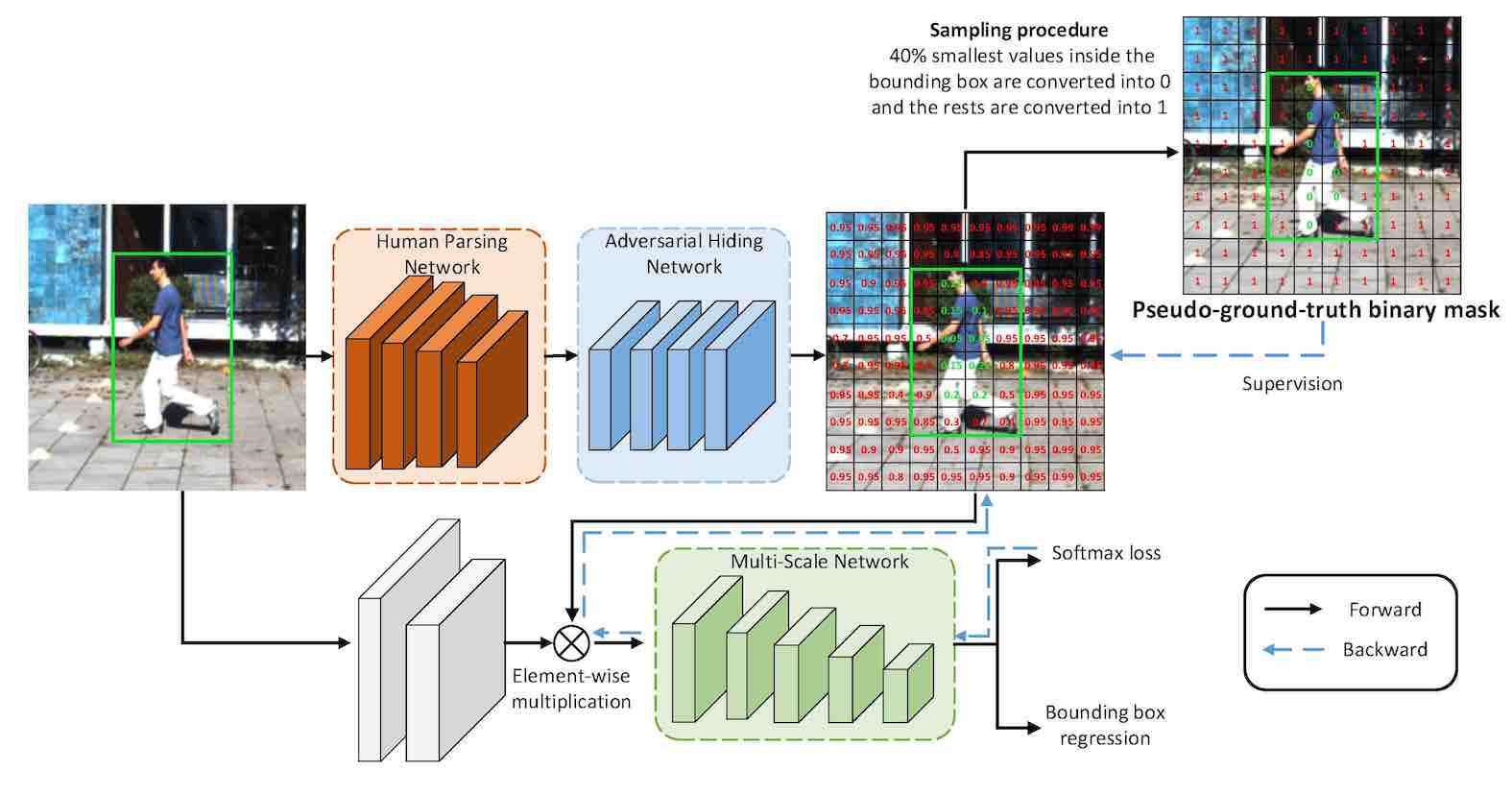

Chunze Lin, Jiwen Lu and Jie Zhou

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2019

[Paper]

Honors and Awards

- 2020 Outstanding employee at SenseTime

- 2020 Excellent Master's thesis of Chinese Institute of Electronics

- 2019 Outstanding graduate of Tsinghua University

- 2019 Excellent Master's thesis of Tsinghua University

- 2016-2019 Chinese Government Scholarships

Academic Services

- Conference Reviewer: CVPR, ICCV, ICME, FG, ICIP

- Journal Reviewer: TIP, IJCV, TCSVT